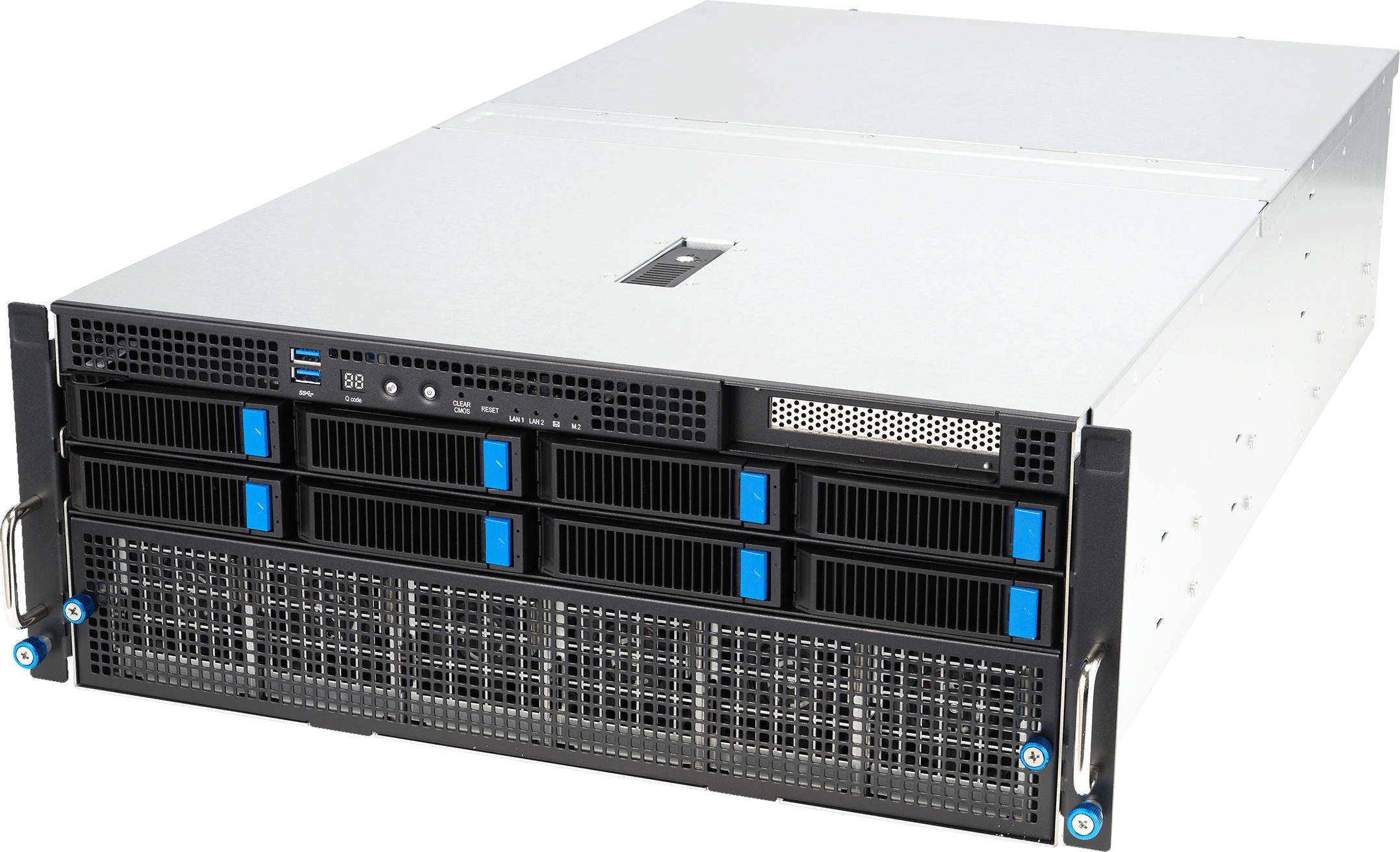

H: 6.87″ (174.5 mm) × W: 17.3″ (440 mm) × D: 31.5″ (800 mm)

The Powerworks Matrix A1 is designed to meet the demands of cutting-edge AI infrastructure. With support for massive memory bandwidth, PCIe Gen5, and up to eight B200 NVL GPUs, it delivers top-tier compute performance for the most advanced enterprise, research, and cloud-scale AI workloads. Its efficient 4U chassis supports dense GPU configurations without compromising airflow or reliability.

Trains GPT-4/5-class models with high compute density and GPU acceleration. Designed for enterprise technology teams and research-grade AI labs.

Accelerates complex simulations across physics, life sciences, and industrial research requiring precision and scale.

Empowers cloud providers to offer AI workloads at scale, from inference pipelines to full model training as a service.

192 cores of parallel processing power designed for compute-intensive AI and HPC tasks.

Handles massive AI datasets and complex simulations with high-speed, error-correcting memory.

Blazing-fast I/O with RAID-configured NVMe for model checkpointing, datasets, and AI pipelines.

Ideal for AI research labs, tech enterprises, and cloud AI providers scaling generative AI models.

Reliable, power-efficient dual-supply design for mission-critical uptime and load balancing.

Micro-Tower

Height: 14.5″ (368.3 mm)

Width: 7.5″ (190.5 mm)

Depth: 15.5″ (394 mm)